AI will be used by humanitarian organisations – this could deepen neocolonial tendencies

Liu zishan/Shutterstock.com

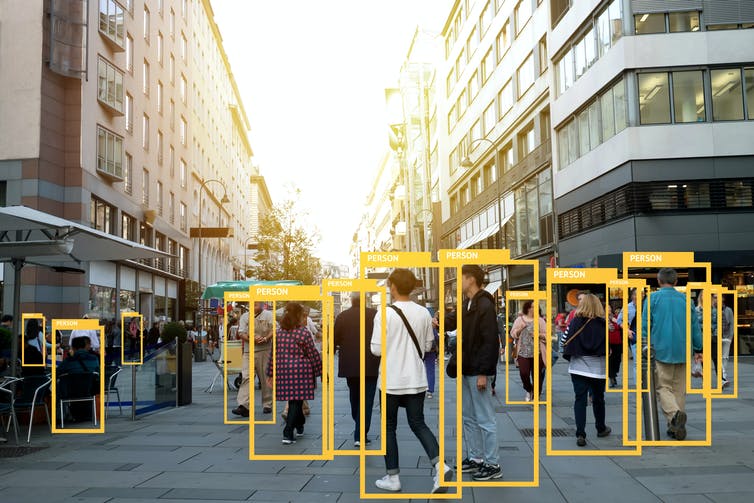

Artificial intelligence, or AI, is undergoing a period of massive expansion. This is not because computers have achieved human-like consciousness, but because of advances in machine learning, where computers learn from huge databases how to classify new data. At the cutting edge are the neural networks that have learned to recognise human faces or play Go.

Recognising patterns in data can also be used as a predictive tool. AI is being applied to echocardiograms to predict heart disease, to workplace data to predict if employees are going to leave, and to social media feeds to detect signs of incipient depression or suicidal tendencies. Any walk of life where there is abundant data – and that means pretty much every aspect of life – is being eyed up by government or business for the application of AI.

One activity that currently seems distant from AI is humanitarianism: the organisation of on-the-ground aid to fellow human beings in crisis due to war, famine or other disaster. But humanitarian organisations too will adopt AI. Why? Because it seems able to answer questions at the heart of humanitarianism – questions such as who we should save, and how to be effective at scale. AI also resonates strongly with existing modes of humanitarian thinking and doing, in particular the principles of neutrality and universality. Humanitarianism (it is believed) does not take sides, is unbiased in its application and offers aid irrespective of the particulars of a local situation.

The way machine learning consumes big data and produces predictions certainly suggests it can both grasp the enormity of the humanitarian challenge and provide a data-driven response. But the nature of machine learning operations means they will actually deepen some of the problems of humanitarianism, and introduce new ones of their own.

The maths

Exploring these questions requires a short detour into the concrete operations of machine learning, if we are to bypass the misinformation and mystification that attaches to the term AI. Because there is no intelligence in artificial intelligence. Nor does it really learn, even though its technical name is machine learning.

AI is simply mathematical minimisation. Remember how at school you would fit a straight line to a set of points, picking the line that minimises the differences overall? Machine learning does the same for complex patterns, fitting input features to known outcomes by minimising a cost function. The result becomes a model that can be applied to new data to predict the outcome.
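The school exercise can be written out in a few lines. What follows is only a minimal sketch, assuming a mean squared error cost function and plain gradient descent; the names `fit_line` and `predict`, the learning rate and the toy numbers are all illustrative choices, not anything taken from a real system.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = slope * x + intercept by minimising mean squared error.

    Illustrative only: the cost function, learning rate and step count
    are assumptions made for this sketch.
    """
    slope, intercept = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Difference between the line's prediction and each known outcome
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        # Gradient of the mean squared error with respect to each parameter
        grad_slope = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = 2.0 / n * sum(errors)
        # Step downhill, reducing the cost a little each time
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


def predict(model, x):
    """Apply the fitted model to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Toy data: input features paired with known outcomes (made-up numbers)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

model = fit_line(xs, ys)
new_prediction = predict(model, 10.0)
```

Nothing in the loop refers to what the numbers stand for. The same procedure, scaled up to many more parameters and far more elaborate curves, is what sits underneath the complex pattern-fitting described above.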

Any and all data can be pushed through machine learning algorithms. Anything that can be reduced to numbers and tagged with an outcome can be used to create a model. The equations don’t know or care if the numbers represent Amazon sales or earthquake victims.

This banality of machine learning is also its power. It’s a generalised numerical compression of questions that matter – there is no comprehension within the computation; the patterns indicate correlation, not causation. The only intelligence comes in the same sense as military intelligence; that is, targeting. The operations are ones of minimising the cost function in order to optimise the outcome.

And the models produced by machine learning can be hard to reverse into human reasoning. Why did it pick this person as a bad parole risk? What does that pattern represent? We can’t necessarily say. So there is an opacity at the heart of the methods. It doesn’t augment human agency but distorts it.

Compartmentalising the world.

Zapp2Photo/Shutterstock.com

Logic of the powerful

Machine learning doesn’t just make decisions without giving reasons, it modifies our very idea of reason. That is, it changes what is knowable and what is understood as real.

For example, in some jurisdictions in the US, if an algorithm produces a prediction that an arrested person is likely to re-offend, that person will be denied bail. Pattern-finding in data becomes a calculative authority that triggers substantial consequences.

Machine learning, then, is not just a method but a machinic philosophy where abstract calculation is understood to access a truth that is seen as superior to the sense-making of ordinary perception. And as such, the calculations of data science can end up counting more than testimony.

Of course, the humanitarian field is not naive about the perils of datafication. It is well known that machine learning could propagate discrimination because it learns from social data which is itself often biased. And so humanitarian institutions will naturally be more careful than most to ensure all possible safeguards against biased training data.

But the problem goes beyond explicit prejudice. The deeper effect of machine learning is to produce the categories through which we will think about ourselves and others. Machine learning also produces a shift to preemption: foreclosing futures on the basis of correlation rather than causation. This constructs risk in the same way that Twitter determines trending topics, allocating and withholding resources in a way that algorithmically demarcates the deserving and the undeserving.

We should perhaps be particularly worried about these tendencies because despite its best intentions, the practice of humanitarianism often shows neocolonial tendencies. By claiming neutrality and universality, algorithms assert the superiority of abstract knowledge generated elsewhere. By embedding the logic of the powerful to determine what happens to people at the periphery, humanitarian AI becomes a neocolonial mechanism that acts in lieu of direct control.

As things stand, machine learning and so-called AI will not be any kind of salvation for humanitarianism. Instead, it will deepen the already deep neocolonial and neoliberal dynamics of humanitarian institutions through algorithmic distortion.

But no apparatus is a closed system; the impact of machine learning is contingent and can be changed. This is as important for humanitarian AI as for AI generally – for, if an alternative technics is not mobilised by approaches such as people’s councils, the next generation of humanitarian scandals will be driven by AI.

Dan McQuillan does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.