The same people excel at object recognition through vision, hearing and touch – another reason to let go of the learning styles myth

Teachers want to connect with students in ways that help them learn. Government of Prince Edward Island, CC BY-NC-ND

The idea that individual people are visual, auditory or kinesthetic learners and learn better if instructed according to these learning styles is one of the most enduring neuroscience myths in education.

There is no proof of the value of learning styles as educational tools. According to experts, believing in learning styles amounts to believing in astrology. But this “neuromyth” keeps going strong.

A 2020 review of teacher surveys revealed that 9 out of 10 educators believe students learn better in their preferred learning style. This belief has not declined since the approach was debunked as early as 2004, despite efforts by scientists, journalists, popular science magazines, centers for teaching and YouTubers over that period. A cash prize offered since 2004 to whoever can prove the benefits of accounting for learning styles remains unclaimed.

Meanwhile, licensing exam materials for teachers in 29 states and the District of Columbia include information on learning styles. Eighty percent of popular textbooks used in pedagogy courses mention learning styles. What teachers believe can also trickle down to learners, who may falsely attribute any learning challenges to a mismatch between their instructor’s teaching style and their own learning style.

Myth of learning styles is resilient

Without any evidence to support the idea, why do people keep believing in learning styles?

One possibility is that people who have incomplete knowledge about the brain might be more susceptible to these ideas. For instance, someone might learn about distinct brain areas that process visual and auditory information. This knowledge may increase the appeal of models that include distinct visual and aural learning styles. But this limited understanding of how the brain works misses the importance of multisensory brain areas that integrate information across senses.

Another reason that people may stick with the belief about learning styles is that the evidence against the model mostly consists of studies that have failed to find support for it. To some people, this could suggest that enough good studies just haven’t been done. Perhaps they imagine that finding support for the intuitive – but wrong – notion of learning styles simply awaits more sensitive experiments, done in the right context, using the latest flavor of learning styles. Despite scientists’ efforts to improve the reputation of null results and encourage their publication, finding “no effect” may simply not capture attention.

But our recent research results do in fact contradict predictions from learning styles models.

We are psychologists who study individual differences in perception. We do not directly study learning styles, but our work provides evidence against models that split “visual” and “auditory” learners.

Object recognition skills related across senses

A few years ago, we became interested in why some people become visual experts more easily than others. We began measuring individual differences in visual object recognition. We tested people’s abilities in performing a variety of tasks like matching or memorizing objects from several categories such as birds, planes and computer-generated artificial objects.

Using statistical methods historically applied to intelligence, we found that almost 90% of the differences between people in these tasks were explained by a general ability we called “o” for object recognition. We found that “o” was distinct from general intelligence, concluding that book smarts may not be enough to excel in domains that rely heavily on visual abilities.
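To give a feel for what "explained by a general ability" can mean, here is a minimal sketch in Python. It is not the analysis used in the research described here: it uses a made-up correlation matrix for four hypothetical tasks and a simpler technique, the share of variance captured by the first principal component, as a rough stand-in for a general factor. The function name `first_component_share` and all numbers are assumptions for illustration only.

```python
import math

def first_component_share(corr, iterations=500):
    """Share of total variance captured by the first principal component.

    `corr` is a square correlation matrix (list of lists). Power iteration
    finds its largest eigenvalue; dividing by the number of tasks gives the
    share, because the diagonal of a correlation matrix sums to that number.
    Assumes mostly positive correlations, so the uniform start vector is not
    orthogonal to the leading eigenvector.
    """
    n = len(corr)
    vec = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        product = [sum(corr[i][j] * vec[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(p * p for p in product))
        vec = [p / norm for p in product]
    # Rayleigh quotient v^T A v for the unit-length vector v.
    eigenvalue = sum(
        vec[i] * corr[i][j] * vec[j] for i in range(n) for j in range(n)
    )
    return eigenvalue / n

# Hypothetical correlations among four made-up tasks (not real data).
hypothetical_corr = [
    [1.0, 0.6, 0.5, 0.4],
    [0.6, 1.0, 0.5, 0.5],
    [0.5, 0.5, 1.0, 0.4],
    [0.4, 0.5, 0.4, 1.0],
]

share = first_component_share(hypothetical_corr)
print(f"First component captures {share:.0%} of the variance")
```

The more strongly the tasks correlate with one another, the larger this share becomes. The toy figure printed here has no bearing on the roughly 90% reported above, which comes from a different kind of model.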

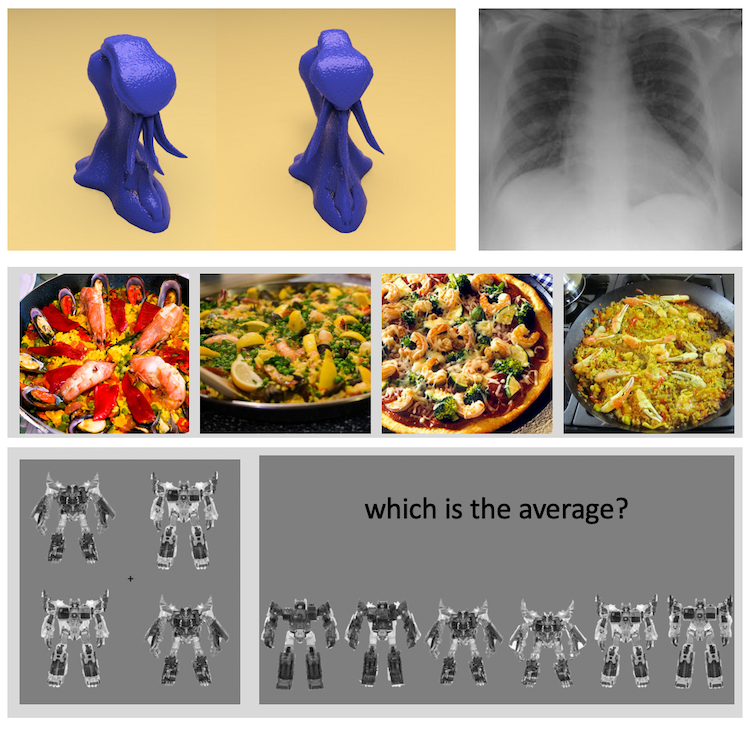

Examples of tasks that tap into object recognition ability, from top left: 1) Are these two objects identical despite the change in viewpoint? 2) Which lung has a tumor? 3) Which of these dishes is the oddball? 4) Which option is the average of the four robots on the right? Answers: 1) no 2) left 3) third 4) fourth.

Isabel Gauthier, CC BY-ND

When we discussed this work with colleagues, they often asked whether this recognition ability was only visual. Unfortunately, we just didn’t know, because the kinds of tests required to measure individual differences in object perception in nonvisual modalities did not exist.

To address the challenge, we chose to start with touch, because vision and touch share their ability to provide information about the shape of objects. We tested participants with a variety of new touch tasks, varying the format of the tests and the kinds of objects participants touched. We found that people who excelled at recognizing new objects visually also excelled at recognizing them by touch.

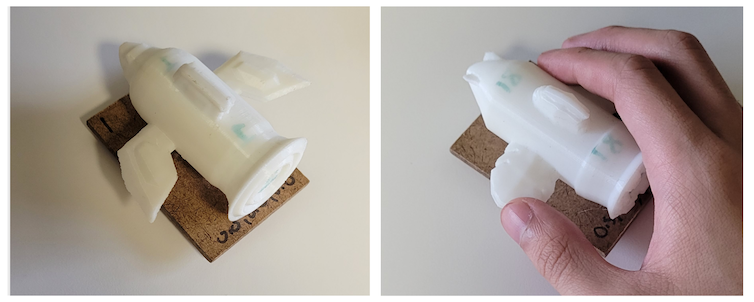

In a task measuring haptic object recognition ability, participants touch pairs of 3D-printed objects without looking at them and decide if they are exactly the same.

Isabel Gauthier

Moving from touch to listening, we were more skeptical. Sound is different from touch and vision and unfolds in time rather than space.

In our latest studies, we created a battery of auditory object recognition tests – you can test yourself. We measured how well people could learn to recognize different bird songs, different people’s laughs and different keyboard sounds.

Quite surprisingly, the ability to recognize by listening was positively correlated with the ability to recognize objects by sight – we measured the correlation at about 0.5. A correlation of 0.5 is not perfect, but it signifies quite a strong effect in psychology. As a comparison, the mean correlation of IQ scores between identical twins is around 0.86, between siblings around 0.47, and between cousins 0.15.
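For readers curious how a correlation like this is computed, the following is a minimal sketch in Python. The scores are invented for illustration and are not data from the studies, and the function names `pearson_r` and `shared_variance` are hypothetical helpers. The sketch also shows one common way to read a correlation of 0.5: squared, it corresponds to 25% of the variance being shared between the two measures.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squares around each mean.
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cross / math.sqrt(ss_x * ss_y)

def shared_variance(r):
    """Proportion of variance two measures share, given their correlation."""
    return r ** 2

# Made-up test scores for eight hypothetical participants (not real data).
visual_scores = [12, 15, 9, 20, 17, 11, 14, 18]
auditory_scores = [10, 13, 12, 16, 12, 11, 15, 14]

r = pearson_r(visual_scores, auditory_scores)
print(f"Correlation in the made-up sample: {r:.2f}")

# The correlations quoted in the text, expressed as shared variance.
for label, value in [("sight vs. listening", 0.5),
                     ("identical twins' IQ", 0.86),
                     ("siblings' IQ", 0.47),
                     ("cousins' IQ", 0.15)]:
    print(f"{label}: r = {value}, shared variance = {shared_variance(value):.0%}")
```

A correlation describes a trend across a group of people. It does not mean that any one person's listening score can be read off from their visual score.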

This relationship between recognition abilities in different senses stands in contrast to learning styles studies’ failure to find expected correlations among variables. For instance, people’s preferred learning styles do not predict performance on measures of pictorial, auditory or tactile learning.

Better to measure abilities than preferences?

The myth of learning styles is resilient. Fans stick with the idea and the perceived possible benefits of asking students how they prefer to learn.

Our results add something new to the mix, beyond evidence that accounting for learning preferences does not help, and beyond evidence supporting better teaching methods – like active learning and multimodal instruction – that actually do foster learning.

Our work reveals that people vary much more than typically expected in perceptual abilities, and that these abilities are correlated across touch, vision and hearing. Just as we can expect that a student excelling in English is likely also to excel in math, we should expect that the student who learns best from visual instruction may also learn just as well when manipulating objects. And because cognitive skills and perceptual skills are not strongly related, measuring them both can provide a more complete picture of a person’s abilities.

In sum, measuring perceptual abilities should be more useful than measuring perceptual preferences, because perceptual preferences consistently fail to predict student learning. It’s possible that learners may benefit from knowing they have weak or strong general perceptual skills, but critically, this has yet to be tested. Nevertheless, there remains no support for the “neuromyth” that teaching to specific learning styles facilitates learning.

Isabel Gauthier receives funding from the National Science Foundation.

Jason Chow does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.