Revolutionizing Creation on Roblox with Generative AI

Earlier this year, we shared our vision for generative artificial intelligence (AI) on Roblox and the intuitive new tools that will enable every user to become a creator. As these tools evolve rapidly across the industry, I wanted to provide some updates on the progress we’ve made, the road that’s still ahead to democratize generative AI creation, and why we think generative AI is a critical element for where Roblox is going.

Advances in generative AI and large language models (LLMs) present an incredible opportunity to unlock the future of immersive experiences by enabling easier, faster creation while maintaining safety and without requiring massive compute resources. Further, advances in AI models that are multimodal, meaning they are trained with multiple types of content—such as images, code, text, 3D models, and audio—open the door for new advances in creation tools. These same models are beginning to also produce multimodal outputs, such as a model that can create a text output, as well as some visuals that complement the text. We see these AI breakthroughs as an enormous opportunity to simultaneously increase efficiency for more experienced creators and to enable even more people to bring great ideas to life on Roblox. At this year’s Roblox Developers Conference (RDC), we announced several new tools that will bring generative AI into Roblox Studio and beyond to help anyone on Roblox scale faster, iterate more quickly, and augment their skills to create even better content.

Roblox Assistant

Roblox has always provided creators with the tools, services, and support they need to build immersive 3D experiences. At the same time, we’ve seen our creators begin to use third-party generative and conversational AI to help them create. While they are useful to help reduce the creator’s workload, these off-the-shelf versions were not designed for end-to-end Roblox workflows or trained on Roblox code, slang, and lingo. That means creators face significant additional work to use these versions to create content for Roblox. We have been working on ways to bring the value of these tools into Roblox Studio, and at RDC we shared an early example of Assistant.

Assistant is our conversational AI that enables creators of all skill levels to spend significantly less time on the mundane, repetitive tasks involved in creating and more time on high-value activities, like narrative, gameplay, and experience design. Roblox is uniquely positioned to build this conversational AI model for immersive 3D worlds, thanks to our access to a large set of public 3D models to train on, our ability to integrate a model with our platform APIs, and our growing suite of innovative AI solutions. Creators will be able to use natural language text prompts to create scenes, edit 3D models, and apply interactive behaviors to objects. Assistant will support the three phases of creation: learning, coding, and building.

Learning: Whether a creator is brand-new to developing on Roblox or a seasoned veteran, Roblox Assistant will help answer questions across a wide range of surfaces using natural language.

Coding: Assistant will expand on our recent Code Assist tool. For example, developers could ask Assistant to improve their code, explain a section of code, or help debug and suggest fixes for code that isn’t working properly.

Building: Assistant will help creators rapidly prototype new ideas. For example, a new creator could generate entire scenes and try out different versions simply by typing a prompt like “Add some streetlights along this road” or “Make a forest with different kinds of trees. Now add some bushes and flowers.”

Working with Assistant will be collaborative, interactive, and iterative, enabling creators to provide feedback and have Assistant work to provide the right solution. It will be like having an expert creator as a partner that you can bounce ideas off of and try out ideas until you get it right.

To make Assistant the best partner it can be, we made another announcement at RDC: We invited developers to opt in to contribute their anonymized Luau script data. This script data will help make our AI tools, like Code Assist and Assistant, significantly better at suggesting and creating more efficient code, giving back to the Roblox developers who use them. Further, if developers opt to share beyond Roblox, their script data will be added to a data set made available to third parties to train their AI chat tools to be better at suggesting Luau code, giving back to Luau developers everywhere.

To be clear, this program is opt-in. We designed it that way based on comprehensive user research and transparent conversations with top developers, and we will help ensure that all participants understand and consent to what the program entails. As a thank-you to those who choose to share script data with Roblox, we will grant access to the more powerful versions of Assistant and Code Assist that are powered by this community-trained model. Those who haven’t opted in will continue to have access to our existing versions of Assistant and Code Assist.

Easier Avatar Creation

Ultimately, we want each of our 65.5 million daily users to have an avatar that truly represents them and expresses who they are. We recently released the ability for our UGC Program members to create and sell both avatar bodies and standalone heads. Today, that process requires access to Studio or our UGC Program, a fairly high level of skill, and multiple days of work to enable facial expression, body movement, 3D rigging, etc. This makes avatars time-consuming to create and has, to date, limited the number of options available. We want to go even further.

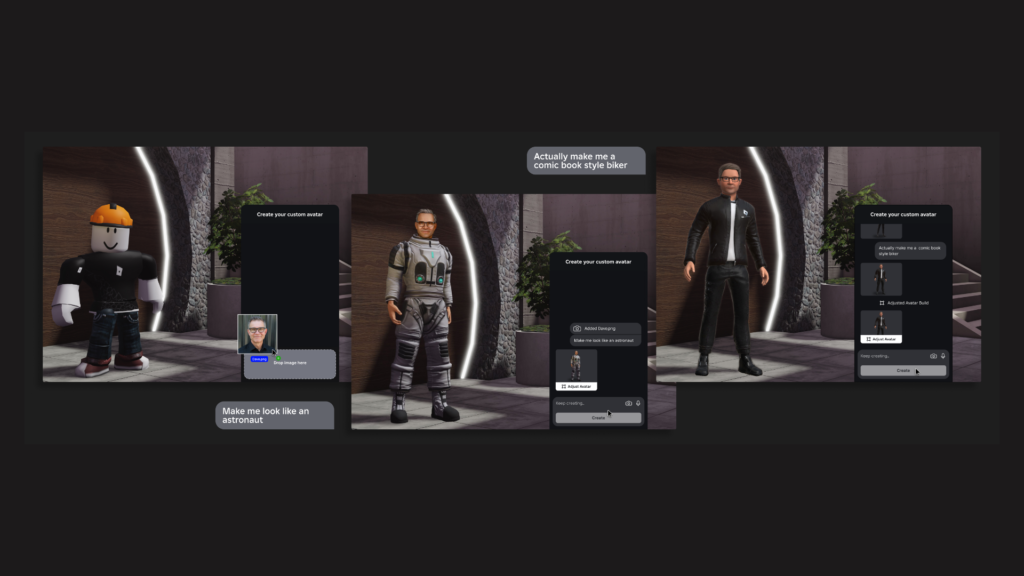

To enable everyone on Roblox to have a personalized, expressive avatar, we need to make avatars very easy to generate and customize. At RDC, we announced a new tool we’re releasing in 2024 that will enable easy creation of a custom avatar from an image or from several images. With this tool, any creator with access to Studio or our UGC program will be able to upload an image, have an avatar created for them, and then modify it as they like. Longer term, we intend to also make this available directly within experiences on Roblox.

To make this possible, we are training AI models on Roblox’s avatar schema and a set of Roblox-owned 3D avatar models. One approach leverages research for generating 3D stylized avatars from 2D images. We are also looking at using pre-trained text-to-image diffusion models to augment limited 3D training data with 2D generative techniques, and using a generative adversarial network (GAN)-based 3D generation network for training. Finally, we are working on using ControlNet to layer in predefined poses to guide the resulting multi-view images of the avatars.

This process produces a 3D mesh for the avatar. Next, we leverage 3D semantic segmentation research, trained on 3D avatar poses, to take that 3D mesh and adjust it to add appropriate facial features, caging, rigging, and textures, in essence, making the static 3D mesh into a Roblox avatar. Finally, a mesh-editing tool allows users to morph and adjust the model to make it look more like the version they are imagining. And all of this happens fast—within minutes—generating a new avatar that can be imported into Roblox and used in an experience.

Moderating Voice Communication

For us, AI isn’t just about creation; it’s also a much more efficient way to ensure a diverse, safe, and civil community at scale. As we begin to roll out new voice features, including voice chat, Roblox Connect (the new feature for calling as your avatar), and APIs announced at RDC, we face a new challenge: moderating spoken language in real time. The current industry standard for this relies on Automatic Speech Recognition (ASR), which essentially takes an audio file, transcribes it into text, and then analyzes the text to look for inappropriate language, keywords, etc.

This works well for companies using it at a smaller scale, but as we explored using this same ASR process to moderate voice communication, we quickly realized that it’s difficult and inefficient at our scale. This approach also loses incredibly valuable information that’s encoded in a speaker’s volume and tone of voice, as well as the broader context of the conversation. Of the millions of minutes of conversation we’d have to transcribe every day, across different languages, only a very small percentage would even possibly sound like something inappropriate. And as we continue to scale, that system would require more and more compute power to keep up. So we took a closer look at how we could do this more efficiently, by building a pipeline that goes directly from the live audio to labeling content to indicate whether it violates our policies or not.

Ultimately, we were able to build an in-house custom voice-detection system by using ASR to classify our in-house voice data sets, then using that classified voice data to train the system. More specifically, to train this new system, we begin with audio and create a transcript. We then run the transcript through our Roblox text filter system to classify the audio. This text filter system is great at detecting policy-violating language on Roblox since we’ve been optimizing this same filter system for years on Roblox-specific slang, abbreviations, and lingo. At the end of these layers of training, we have a model that’s capable of detecting policy violations directly from audio in real time.
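The labeling step described above can be sketched in a few lines of Python. This is only an illustration of the general idea (transcribe the audio, classify the transcript, attach that label back to the audio), not our production pipeline. The names `LabeledClip`, `build_training_set`, `fake_transcribe`, and `fake_text_filter` are all hypothetical, and the two stand-in functions exist only so the sketch runs end to end.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LabeledClip:
    audio: bytes            # raw audio for one clip
    violates_policy: bool   # label derived from the transcript, not the audio


def build_training_set(
    clips: List[bytes],
    transcribe: Callable[[bytes], str],
    text_filter: Callable[[str], bool],
) -> List[LabeledClip]:
    """Label each audio clip by transcribing it and filtering the transcript."""
    labeled = []
    for audio in clips:
        transcript = transcribe(audio)      # ASR is used here only to create labels
        flagged = text_filter(transcript)   # the text filter decides the label
        labeled.append(LabeledClip(audio=audio, violates_policy=flagged))
    return labeled


# Hypothetical stand-ins: a real system would call an ASR model and a text filter.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio bytes are already text


def fake_text_filter(text: str) -> bool:
    return "badword" in text.lower()


training_set = build_training_set(
    [b"hello there", b"you BADWORD"], fake_transcribe, fake_text_filter
)
```

The resulting audio-and-label pairs are what an audio classifier would be trained on, so that transcription is no longer needed at detection time.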

While this system does have the ability to detect specific keywords such as profanity, policy violations are rarely just one word. One word can often seem problematic in one context and just fine in a different context. Essentially, these types of violations involve what you’re saying, how you’re saying it, and the context in which the statements are made.

To get better at understanding context, we leverage the native power of a transformer-based architecture, which is very good at sequence summarization. It can take a sequence of data, like an audio stream, and summarize it for you. This architecture enables us to preserve a longer audio sequence so we can detect not only words but also context and intonations. Once all of these elements come together, we have a final system where the input is audio and the output is a classification—violates policy or doesn’t. This system can detect keywords and policy-violating phrases, but also tone, sentiment, and other context that’s important to determine intent. This new system, which detects policy-violating speech directly from audio, is significantly more compute efficient than a traditional ASR system, which will make it much easier to scale as we continue to reimagine how people come together.

We also needed a new way to warn those on our voice communication tools of the potential consequences of this type of language. With this innovative detection system at our disposal, we are now experimenting with ways to affect online behavior to maintain a safe environment. We know people sometimes violate our policies unintentionally, and we want to understand if an occasional reminder might help prevent further offenses. To help with this, we are experimenting with real-time user feedback through notifications. If the system detects that you’ve said something that violates our policies some number of times, we’ll display a pop-up notification on your screen informing you that your language violates our policies and directing you to our policies for more information.
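As a rough illustration of the notification logic, here is a minimal Python sketch of a per-user counter that triggers a reminder after repeated detections. The threshold value, the reset behavior, and the `ViolationNotifier` class are assumptions made for this example; the actual number of detections and the real mechanism are not specified here.

```python
from collections import defaultdict
from typing import Dict

WARNING_THRESHOLD = 3  # assumed value; the text says only "some number of times"


class ViolationNotifier:
    """Hypothetical per-user counter that decides when to show a reminder."""

    def __init__(self, threshold: int = WARNING_THRESHOLD) -> None:
        self.threshold = threshold
        self.counts: Dict[str, int] = defaultdict(int)

    def record(self, user_id: str, violates_policy: bool) -> bool:
        """Record one classifier result; return True if a pop-up should be shown."""
        if not violates_policy:
            return False
        self.counts[user_id] += 1
        if self.counts[user_id] >= self.threshold:
            self.counts[user_id] = 0  # assumed reset so reminders stay occasional
            return True
        return False
```

For example, with `ViolationNotifier(threshold=2)`, the second flagged utterance from the same user returns `True`, and the counter then starts over.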

Voice stream notifications are just one element of the moderation system, however. We also look at behavioral patterns on the platform, as well as complaints from others on Roblox, to drive our overall moderation decisions. The aggregate of these signals could result in stronger consequences, including having access to audio features revoked, or for more serious infractions, being banned from the platform entirely. Keeping our community safe and civil is critical as these advances in multimodal AI models, generative AI, and LLMs come together to enable incredible new tools and capabilities for creators.

We believe that providing creators with these tools will both lower the barrier to entry for less experienced creators and free more experienced creators from the more tedious tasks of this process. This will allow them to spend more time on the inventive aspects of fine-tuning and ideating. Our goal with all of this is to enable everyone, everywhere to bring their ideas to life and to vastly increase the diversity of avatars, items, and experiences available on Roblox. We are also sharing information and tools to help protect new creations.

We’re already imagining amazing possibilities. Say someone is able to create an avatar doppelganger directly from a photo. They could then customize their avatar to make it taller or render it in anime style. Or they could build an experience by asking Assistant to add cars, buildings, and scenery, set lighting or wind conditions, or change the terrain. From there, they could iterate to refine things just by typing back and forth with Assistant. We know the reality of what people create with these tools, as they become available, will go well beyond what we can even imagine.

The post Revolutionizing Creation on Roblox with Generative AI appeared first on Roblox Blog.