NASA’s Mars rovers could inspire a more ethical future for AI

Rather than using AI to replace workers, companies can build teams that ethically integrate the technology.

Yuichiro Chino/Moment via Getty Images

Since ChatGPT’s release in late 2022, many news outlets have reported on the ethical threats posed by artificial intelligence. Tech pundits have issued warnings of killer robots bent on human extinction, while the World Economic Forum predicted that machines will take away jobs.

The tech sector is slashing its workforce even as it invests in AI-enhanced productivity tools. Writers and actors in Hollywood are on strike to protect their jobs and their likenesses. And scholars continue to show how these systems heighten existing biases or create meaningless jobs – amid myriad other problems.

There is a better way to bring artificial intelligence into workplaces. I know, because I’ve seen it, as a sociologist who works with NASA’s robotic spacecraft teams.

The scientists and engineers I study are busy exploring the surface of Mars with the help of AI-equipped rovers. But their job is no science fiction fantasy. It’s an example of the power of weaving machine and human intelligence together, in service of a common goal.

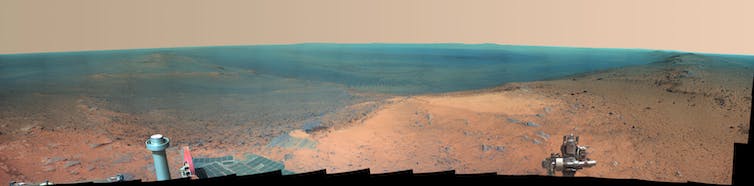

Mars rovers act as an important part of NASA’s team, even while operating millions of miles away from their scientist teammates.

NASA/JPL-Caltech via AP

Instead of replacing humans, these robots partner with us to extend and complement human qualities. Along the way, they avoid common ethical pitfalls and chart a humane path for working with AI.

The replacement myth in AI

Stories of killer robots and job losses illustrate how a “replacement myth” dominates the way people think about AI. In this view, humans can and will be replaced by automated machines.

Amid the existential threat is the promise of business boons like greater efficiency, improved profit margins and more leisure time.

Yet empirical evidence shows that automation does not cut costs. Instead, it increases inequality by cutting out low-status workers and increasing the salary cost for high-status workers who remain. Meanwhile, today’s productivity tools inspire employees to work more for their employers, not less.

Alternatives to straight-out replacement are “mixed autonomy” systems, where people and robots work together. For example, self-driving cars must be programmed to operate in traffic alongside human drivers. Autonomy is “mixed” because both humans and robots operate in the same system, and their actions influence each other.

Self-driving cars, while operating without human intervention, still require training from human engineers and data collected by humans.

AP Photo/Tony Avelar

However, mixed autonomy is often seen as a step along the way to replacement. And it can lead to systems where humans merely feed, curate or teach AI tools. This saddles humans with “ghost work” – mindless, piecemeal tasks that programmers hope machine learning will soon render obsolete.

Replacement raises red flags for AI ethics. Work like tagging content to train AI or scrubbing Facebook posts typically features traumatic tasks and a poorly paid workforce spread across the Global South. And legions of autonomous vehicle designers are obsessed with “the trolley problem” – determining when or whether it is ethical to run over pedestrians.

But my research with robotic spacecraft teams at NASA shows that when companies reject the replacement myth and opt for building human-robot teams instead, many of the ethical issues with AI vanish.

Extending rather than replacing

Human-robot teams work best when they extend and augment human capabilities instead of replacing them. Engineers craft machines that can do work that humans cannot. Then, they weave machine and human labor together intelligently, working toward a shared goal.

Often, this teamwork means sending robots to do jobs that are physically dangerous for humans. Minesweeping, search-and-rescue, spacewalks and deep-sea exploration are all real-world examples.

Teamwork also means leveraging the combined strengths of both robotic and human senses or intelligences. After all, there are many capabilities that robots have that humans do not – and vice versa.

For instance, human eyes on Mars would see only dimly lit, dusty red terrain stretching to the horizon. So engineers outfit Mars rovers with camera filters to “see” infrared wavelengths of light that humans can’t, returning pictures in brilliant false colors.

Mars rovers capture images in near infrared to show what Martian soil is made of.

NASA/JPL-Caltech/Cornell Univ./Arizona State Univ.

Meanwhile, the rovers’ onboard AI cannot generate scientific findings. It is only by combining colorful sensor results with expert discussion that scientists can use these robotic eyes to uncover new truths about Mars.

Respectful data

Another ethical challenge to AI is how data is harvested and used. Generative AI is trained on artists’ and writers’ work without their consent, commercial datasets are rife with bias, and ChatGPT “hallucinates” answers to questions.

The real-world consequences of this data use in AI range from lawsuits to racial profiling.

Robots on Mars also rely on data, processing power and machine learning techniques to do their jobs. But the data they need is visual and distance information to generate driveable pathways or suggest cool new images.

By focusing on the world around them instead of our social worlds, these robotic systems avoid the questions around surveillance, bias and exploitation that plague today’s AI.

The ethics of care

When integrated seamlessly, robots can unite the groups that work with them by eliciting human emotions. For example, seasoned soldiers mourn broken drones on the battlefield, and families give names and personalities to their Roombas.

I saw NASA engineers break down in anxious tears when the rovers Spirit and Opportunity were threatened by Martian dust storms.

Some people feel a connection to their robot vacuums, similar to the connection NASA engineers feel to Mars rovers.

nikolay100/iStock / Getty Images Plus via Getty Images

Unlike anthropomorphism – projecting human characteristics onto a machine – this feeling is born from a sense of care for the machine. It is developed through daily interactions, mutual accomplishments and shared responsibility.

When machines inspire a sense of care, they can underline – not undermine – the qualities that make people human.

A better AI is possible

In industries where AI could be used to replace workers, technology experts might consider how clever human-machine partnerships could enhance human capabilities instead of detracting from them.

Script-writing teams may appreciate an artificial agent that can look up dialog or cross-reference on the fly. Artists could write or curate their own algorithms to fuel creativity and retain credit for their work. Bots to support software teams might improve meeting communication and find errors that emerge from compiling code.

Of course, rejecting replacement does not eliminate all ethical concerns with AI. But many problems associated with human livelihood, agency and bias shift when replacement is no longer the goal.

The replacement fantasy is just one of many possible futures for AI and society. After all, no one would watch “Star Wars” if the ’droids replaced all the protagonists. For a more ethical vision of humans’ future with AI, you can look to the human-machine teams that are already alive and well, in space and on Earth.


Janet Vertesi has consulted for NASA teams. She receives funding from the National Science Foundation.