Artificial intelligence is now part of our everyday lives – and its growing power is a double-edged sword

AI-generated images of "a stained glass window with an image of a blue strawberry". OpenAI

A major new report on the state of artificial intelligence (AI) has just been released. Think of it as the AI equivalent of an Intergovernmental Panel on Climate Change report, in that it identifies where AI stands today, and the promise and perils in view.

From language generation and molecular medicine to disinformation and algorithmic bias, AI has begun to permeate every aspect of our lives.

The report argues that we are at an inflection point where researchers and governments must think and act carefully to contain the risks AI presents and make the most of its benefits.

A century-long study of AI

The report comes out of the AI100 project, which aims to study and anticipate the effects of AI rippling out through our lives over the course of the next 100 years.

AI100 produces a new report every five years: the first was published in 2016, and this is the second. As two points define a line, this second report lets us see the direction AI is taking us in.

One of us (Liz Sonenberg) is a member of the standing committee overseeing the AI100 project, and the other (Toby Walsh) was on the study panel that wrote this particular report. Members of the panel came from across the world, with backgrounds in computer science, engineering, law, political science, policy, sociology and economics.

AI100 standing committee chair Peter Stone takes a shot against a robot goalie at RoboCup 2019 in Sydney.

RoboCup / YouTube

The promises and perils of AI are becoming real

The report highlights the remarkable progress made in AI over the past five years. AI has left the laboratory and entered our lives, having a “real-world impact on people, institutions, and culture”. Read the news on any given day and you’re likely to find multiple stories about some new advance in AI or some new use of it.

For example, in natural language processing (NLP), computers can now analyse and even generate realistic human language. To demonstrate, we asked OpenAI’s GPT-3 system, one of the largest neural networks ever built, to summarise the AI100 report for you. It did a pretty good job, even if the summary confronts our sense of self by being written in the first person:

In the coming decade, I expect that AI will play an increasingly prominent role in the lives of people everywhere. AI-infused services will become more common, and AI will become increasingly embedded in the daily lives of people across the world.

I believe that this will bring with it great economic and societal benefits, but that it will also require us to address the many challenges to ensure that the benefits are broadly shared and that people are not marginalised by these new technologies.

A key insight of AI research is that it is easier to build things than to understand why they work. However, defining what success looks like for an AI application is not straightforward.

For example, the AI systems that are used in healthcare to analyse symptoms, recommend diagnoses, or choose treatments are often far better than anything that could be built by a human, but their success is hard to quantify.

As a second example of the recent and remarkable progress in AI, consider the latest breakthrough from Google’s DeepMind. AlphaFold is an AI program that provides a huge step forward in our ability to predict how proteins fold.

This will likely lead to major advances in life sciences and medicine, accelerating efforts to understand the building blocks of life and enabling quicker and more sophisticated drug discovery. Most of the planet now knows to their cost how the unique shape of the spike proteins in the SARS-CoV-2 virus is key to its ability to invade our cells, and also to the vaccines developed to combat its deadly progress.

The AI100 report argues that worries about super-intelligent machines and wide-scale job loss from automation are still premature, requiring AI that is far more capable than available today. The main concern the report raises is not malevolent machines of superior intelligence to humans, but incompetent machines of inferior intelligence.

Once again, it’s easy to find in the news real-life stories of risks and threats to our democratic discourse and mental health posed by AI-powered tools. For instance, Facebook uses machine learning to sort its news feed and give each of its 2 billion users a unique but often inflammatory view of the world.

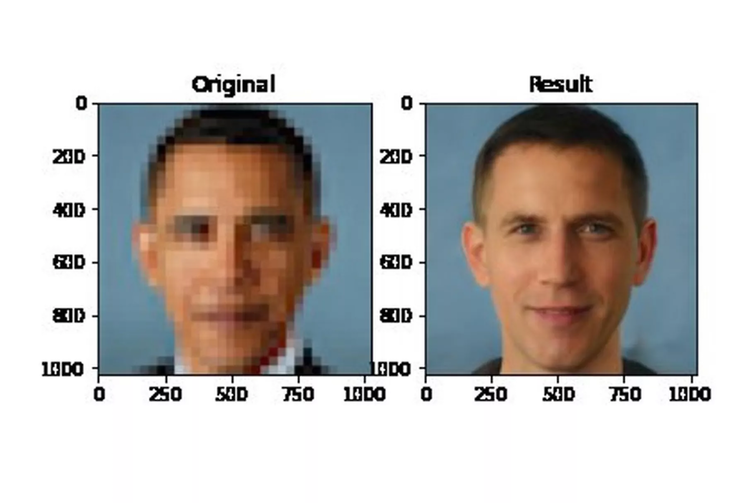

Algorithmic bias in action: ‘depixelising’ software makes a photo of former US president Barack Obama appear ethnically white.

Twitter / Chicken3gg

The time to act is now

It’s clear we’re at an inflection point: we need to think seriously and urgently about the downsides and risks the increasing application of AI is revealing. The ever-improving capabilities of AI are a double-edged sword. Harms may be intentional, like deepfake videos, or unintended, like algorithms that reinforce racial and other biases.

AI research has traditionally been undertaken by computer and cognitive scientists. But the challenges being raised by AI today are not just technical. All areas of human inquiry, and especially the social sciences, need to be included in a broad conversation about the future of the field. Minimising negative impacts on society and enhancing the positives requires input from across academia and from society at large.

Governments also have a crucial role to play in shaping the development and application of AI. Indeed, governments around the world have begun to consider and address the opportunities and challenges posed by AI. But they remain behind the curve.

A greater investment of time and resources is needed to meet the challenges posed by the rapidly evolving technologies of AI and associated fields. In addition to regulating, governments need to educate. In an AI-enabled world, our citizens, from the youngest to the oldest, need to be literate in these new digital technologies.

At the end of the day, the success of AI research will be measured by how it has empowered all people, helping tackle the many wicked problems facing the planet, from the climate emergency to increasing inequality within and between countries.

AI will have failed if it harms or devalues the very people we are trying to help.

Liz Sonenberg has received funding from the Australian Research Council for several projects in the AI domain. She is a member of the AI100 Standing Committee (https://ai100.stanford.edu/people-0) that commissioned the report discussed in this article.

Toby Walsh receives funding from the Australian Research Council for a project in Trustworthy AI. He was one of the 17 members of the AI100 Study Panel that produced the report described in this article.