No, artificial intelligence won’t steal your children’s jobs – it will make them more creative and productive

The South Korean go player Lee Sedol after a 2016 match against Google's artificial-intelligence program AlphaGo. Sedol, ranked 9th in the world, lost 4-1. Lee Jin-man/Flickr, CC BY

“Whatever your job is, the chances are that one of these machines can do it faster or better than you can.”

No, this is not a 2018 headline about self-driving cars or one of IBM’s new supercomputers. Instead, it was published by the Daily Mirror in 1955, when a computer took as much space as a large kitchen and had less power than a pocket calculator. They were called “electronic brains” back then, and evoked both hope and fear. And more than 20 years later, little had changed: In a 1978 BBC documentary about silicon chips, one commentator argued that “They are the reason why Japan is abandoning its shipbuilding and why our children will grow up without jobs to go to”.

Artificial intelligence hype is not new

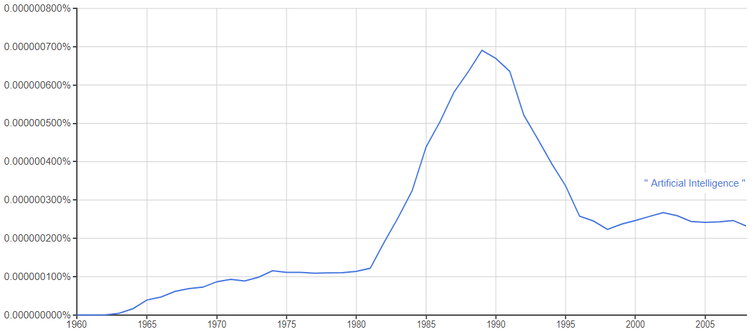

If one types “artificial intelligence” (AI) into Google Books’ Ngram Viewer – a tool that shows how often a term appeared in books printed between 1800 and 2008 – we can clearly see that our modern-day hype, optimism and deep concern about AI are by no means a novelty.

Searches for the term ‘artificial intelligence’ on Google Books’ Ngram Viewer.

Author provided

The history of AI is a long series of booms and busts. The first “AI spring” took place between 1956 and 1974, with pioneers such as the young Marvin Minsky. This was followed by the “first AI winter” (1974-1980), when disillusion with the gap between machine learning and human cognitive capacities first led to disinvestment and disinterest in the topic. A second boom (1980-1987) was followed by another “winter” (1987-2001). Since the 2000s we’ve been surfing the third “AI spring”.

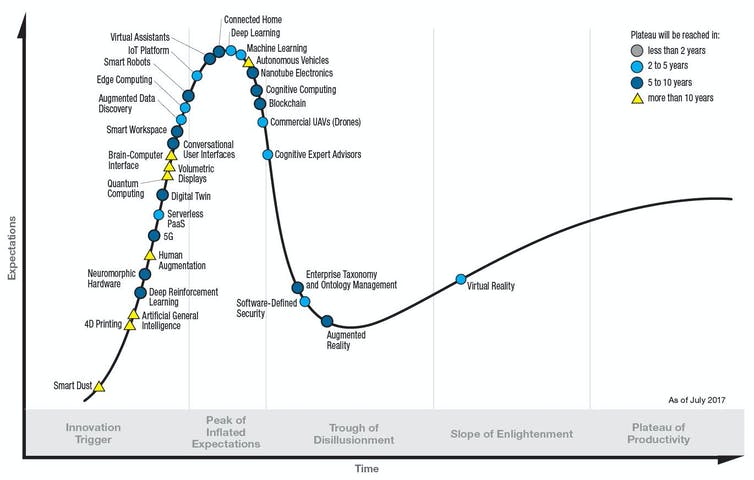

There are plenty of reasons to believe this latest wave of interest in AI is going to be more durable. According to Gartner Research, technologies typically go from a “peak of inflated expectations” through a “trough of disillusionment” until they finally reach a “plateau of productivity”. AI-intensive technologies such as virtual assistants, the Internet of Things, smart robots and augmented data discovery are about to reach the peak. Deep learning, machine learning and cognitive expert advisors are expected to reach the plateau of mainstream applications in two to five years.

Narrow intelligence

We finally seem to have enough computing power to credibly develop what is called “narrow AI”, of which all the aforementioned technologies are examples. These are not to be confused with “artificial general intelligence” (AGI), which scientist and futurologist Ray Kurzweil called “strong AI”. Some of the most advanced AI systems to date, such as IBM’s Watson supercomputer or Google’s AlphaGo, are examples of narrow AI. They can be trained to perform complex tasks such as identifying cancerous skin patterns or playing the ancient Chinese strategy game of Go. They are very far, however, from being capable of everyday general-intelligence tasks such as gardening, arguing or inventing a children’s story.

The cautionary prophecies of visionaries like Elon Musk, Bill Gates and Stephen Hawking are really meant as an early warning against the dangers of AGI, but that is not something our children will be confronted with. Their immediate partners will be of the narrow AI kind. The future of labour depends on how well we equip our children to use computers as cognitive partners.

Hype cycle for emerging technologies.

Gartner Research

Better together

Garry Kasparov – the chess grandmaster who was defeated by IBM’s Deep Blue computer in 1997 – calls this human-machine cooperation “augmented intelligence”. He compares this “augmentation” to the mythic image of a centaur: combine a quadruped’s horsepower with the intuition of a human mind. To illustrate the potential of centaurs, he describes a freestyle chess tournament in 2005 in which any combination of human-machine teams was possible. In his words:

“The winner was revealed to be not a grandmaster with a state-of-the-art PC but a pair of amateur American chess players using three computers at the same time. Their skill at manipulating and ‘coaching’ their computers to look very deeply into positions effectively counteracted the superior chess understanding of their grand-master opponents and the greater computational power of other participants. Weak human + machine + better process was superior to a strong computer alone and, more remarkably, superior to a strong human + machine + inferior process. Human strategic guidance combined with the tactical acuity of a computer was overwhelming.”

Human-machine cognitive partnerships can amplify what each partner does best: humans are great at making intuitive and creative decisions based on knowledge, while computers are good at sifting through large amounts of data to produce information that will feed into human knowledge and decision making. We use this combination of narrow AI and uniquely human cognitive and motor skills every day, often without realising it. A few examples:

Using Internet search engines to find content (videos, images, articles) that will be helpful in preparing for a school assignment. Then combining them in creative ways in a multimedia slide presentation.

Using a translation algorithm to produce a first draft of a document in a different language, then manually improving the style and grammar of the final document.

Driving a car to an unknown destination using a smartphone GPS application to navigate through alternative routes based on real-time traffic information.

Relying on a movie-streaming platform to shortlist films you are going to appreciate based on your recent history; making the final choice based on mood, social context, serendipity.

Netflix is a great example of this collaboration at its best. By using machine-learning algorithms to analyse how often and how long people watch its content, the company can determine how engaging each story component is to certain audiences. This information is used by screenwriters, producers and directors to better understand what new content to create and how to create it. Virtual-reality technology allows content creators to experiment with different storytelling perspectives before they ever shoot a single scene.

Likewise, architects can rely on computers to adjust the functional aspects of their work. Software engineers can focus on the overall system structure while machines provide ready-to-use code snippets and libraries to speed up the process. Marketers rely on big data and visualisation tools to better understand customer needs and develop better products and services. None of these tasks could be accomplished by AI without human guidance. Conversely, human creativity and productivity have been enormously leveraged by this AI support, allowing people to achieve better-quality solutions at lower cost.

Losses and gains

As innovation accelerates, thousands of jobs will disappear, just as happened in previous industrial revolutions. Machines powered by narrow AI algorithms can already perform certain 3D tasks (“dull, dirty and dangerous”) much better than humans. This may create enormous pain for those who lose their jobs over the next few years, particularly if they don’t acquire the computer-related skills that would enable them to find more creative opportunities. We must learn from the previous waves of creative destruction if we are to mitigate human suffering and increasing inequality.

For example, some statistics indicate that as many as 3% of the population in developed countries work as drivers. When automated cars become a reality in the next 15 to 25 years, we must offer people who will be “structurally unemployed” some form of compensatory income, as well as retraining and redeployment opportunities.

Fortunately, the Schumpeterian waves of destructive innovation also create jobs. History has shown that disruptive innovations are not always a zero-sum game. In the long run, the loss of low-added-value jobs to machines can have a positive impact on the overall quality of life of most workers. The ATM paradox is a good example of this. As the use of automated teller machines spread in the 1980s and ’90s, many predicted massive unemployment in the banking sector. Instead, ATMs created more jobs as the cost of opening new branches decreased. The number of branches multiplied, as did the portfolio of banking products. Thanks to automation, going to the bank offers a much better customer experience than in previous decades. And the jobs in the industry became better paid and were of better quality.

A similar phenomenon happened with the textile industry in the 19th century. Better human-machine coordination leveraged productivity and created customer value, increasing the overall market size and creating new employment opportunities. Likewise, we may predict that as low-quality jobs continue to disappear, AI-assisted jobs will emerge to fulfil the increasing demand for more productive, ecological and creative products. More productivity may mean shorter work weeks and more time for family and entertainment, which may lead to more sustainable forms of value creation and, ultimately, more jobs.

Adapting to the future

This optimistic scenario assumes, however, that education systems will do a better job of preparing our children to become good at what humans do best: creative and critical thinking. Less learning-by-heart (after all, most information is one Google search away) and more learning-by-doing. Fewer clerical skills and more philosophical insights about human nature and how to cater to its infinite needs for art and culture. As Apple co-founder and CEO Steve Jobs famously said:

“What made the Macintosh great was that the people working on it were musicians and poets and artists and zoologists and historians who also happened to be the best computer scientists in the world.”


To become creative and critical thinkers, our children will need knowledge and wisdom more than raw data points. They need to ask “why?”, “how?” and “what if?” more often than “what?”, “who?” and “when?” And they must construct this knowledge by relying on databases as cognitive partners as soon as they learn how to read and write. Constructivist methods such as the “flipped classroom” approach are a good step in that direction. In flipped classrooms, students are told to search for specific content on the web at home and to come to class ready to apply what they learned in a collaborative project supervised by the teacher. Thus they do their “homework” (exercises) in class and have their web “lectures” at home, optimising class time to do what computers cannot help them to do: create, develop and apply complex ideas collaboratively with their peers.

Thus, the future of human-machine collaboration looks less like the scenario in the Terminator movies and more like a Minority Report style of “augmented intelligence”. There will be jobs if we adapt the education system to equip our children to do what humans are good at: to think critically and creatively, to develop knowledge and wisdom, to appreciate and create beautiful works of art. That does not mean it will be a painless transition. Machines and automation will likely take away millions of low-quality jobs, as has happened in the past. But better-quality jobs will likely replace them, requiring less physical effort and shorter hours to deliver better results. At least until artificial general intelligence becomes a reality – then all bets are off. But this will likely be our great-grandchildren’s problem.

Marcos Lima does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.